Background

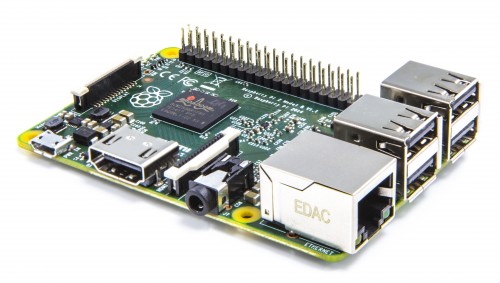

In a prior post (link), I demonstrated a setup in which:

- A Raspberry Pi streams WebRTC video to anywhere on the internet.

- The camera can pan horizontally via an additional WebSocket control channel, driven by a Chrome page that works on desktop and mobile.

I have extended that setup so that it now:

- Can both tilt and pan (X and Y axes, using a separate WebSocket for each).

- Uses a phone's orientation data to dictate the angle each axis should rotate to.

- Exposes a fullscreen WebRTC video view that still relays the orientation data.

- Exposes a fullscreen Google Cardboard display mode that also still relays the orientation data.

The basic network and communication setup didn't change; the extension really only required an additional servo for the Y axis, plus some client-side JavaScript libraries. The client in this case is Chrome for mobile (Android in my case).

Videos

External view: (video)

Internal view: (video)

Screenshots

This post includes video of the setup in action (above), a few pictures of the setup (below), and an explanation of how I extended the client-side functionality.

My home-brew tilt-and-pan rig. Just two servos control it: one for X, one for Y.

A close-up shot of the Y-axis servo. Only the highest quality rubber bands and cardboard will do.

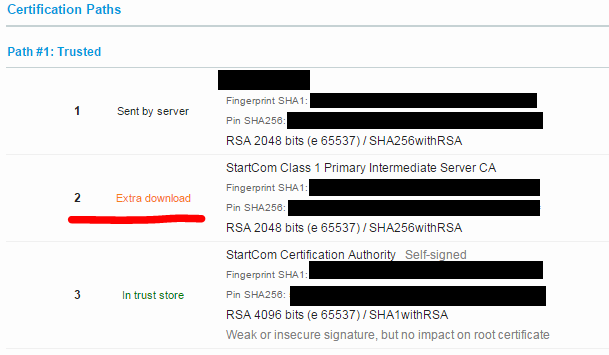

Here is a screenshot of the web control page. It happens to be on desktop, for ease of screenshotting.

It's a prototyping page, and so a number of controls are exposed:

- At the top are interactive controls to connect and disconnect the WebRTC stream and the two WebSocket control channels.

- Below them, the WebRTC video stream plays in a <video> tag.

- The "Camera Control" box below that lets me switch between manual servo control and Orientation Sensor mode. It goes full-screen when touched.

- The Cardboard View is another element (more on this later) that displays the split-screen view required for viewing the video in Google Cardboard. It also goes full-screen when touched.

Here is the Cardboard View element full-screen in Chrome on my phone, displaying the WebRTC video feed in split screen.

And here is that view in my Google Cardboard viewer (a View-Master).

And a close-up of the viewer, closed up and ready to look through.

Client-side functionality

A few client-side enhancements were needed to make this possible:

- Reading the Orientation Sensor data

- Creating the Cardboard view

Reading the Orientation Sensor data

I had played with the Orientation API (link) in the past, following examples around the web, but found it confusing. Maybe I'm just not understanding it correctly. The confusion comes from the Orientation API returning Euler angles (link), which don't directly give me the data I want, and which I found difficult to turn into what I want.

What I really want are readings closer to what a person would intuitively think of as orientation.

That is, if I'm standing up and I rotate in place to the right, that could be expressed as a rotation in positive degrees; turning left would be negative. Let's call that the X axis.

If I look upward or downward, that could also be expressed in positive and negative degrees. Let's call that the Y axis.

In both cases, I map the Orientation Sensor data to X- and Y-axis rotations in degrees, ranging from -90 to 90. That's useful, since 180 degrees total is basically the range of a servo.
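For the pan axis, one way to land in that range is to measure the heading relative to a reference heading captured when orientation control starts, wrap the difference into [-180, 180), and clamp it to the servo's ±90. This is a sketch, not the author's code: `wrap_degrees`, `to_servo_range`, and the idea of a captured reference heading are assumptions, since the post doesn't say how the zero point is chosen. Pitch measured from the horizon already falls within ±90, so it needs no wrapping.

```python
def wrap_degrees(angle: float) -> float:
    """Wrap any angle in degrees into the half-open range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def to_servo_range(heading: float, reference: float) -> float:
    """Heading relative to a (hypothetical) reference heading, clamped to [-90, 90].

    Positive means turned right of the reference, negative means turned left,
    assuming headings increase clockwise like a compass.
    """
    relative = wrap_degrees(heading - reference)
    return max(-90.0, min(90.0, relative))


if __name__ == "__main__":
    print(to_servo_range(10, 350))   # 20.0: crossed north, 20 degrees to the right
    print(to_servo_range(340, 10))   # -30.0: 30 degrees to the left
    print(to_servo_range(180, 0))    # -90.0: facing backwards, clamped to the servo limit
```

Wrapping before clamping matters: without it, crossing north (350 to 10 degrees) would look like a 340-degree swing instead of a 20-degree turn.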

The software running on the Pi is a very simple program that expects to be told a number in the range -90 to 90, and maps that to the appropriate PWM calls to move the servo to that position.
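On the Pi side, turning a number in -90..90 into a servo position is a linear interpolation onto the servo's pulse width. The sketch below assumes each WebSocket message is a plain-text number and a typical hobby servo driven at 50 Hz with pulses from 500 to 2500 microseconds; the message format, the timing constants, and the function names are all assumptions, not taken from the author's program.

```python
# Assumed servo timing: 50 Hz PWM frame, 500-2500 us pulses spanning 180 degrees.
# Check the servo's datasheet; many servos expect 1000-2000 us instead.
PWM_FREQUENCY_HZ = 50
MIN_PULSE_US = 500
MAX_PULSE_US = 2500


def parse_angle(message: str) -> float:
    """Parse a text message like "-42.5" and clamp it to [-90, 90].

    Raises ValueError if the message is not a number (assumed message format).
    """
    angle = float(message.strip())
    return max(-90.0, min(90.0, angle))


def angle_to_pulse_us(angle: float) -> float:
    """Linearly map -90..90 degrees onto MIN_PULSE_US..MAX_PULSE_US."""
    fraction = (angle + 90.0) / 180.0
    return MIN_PULSE_US + fraction * (MAX_PULSE_US - MIN_PULSE_US)


def pulse_to_duty_cycle(pulse_us: float) -> float:
    """Express a pulse width as a percentage of the PWM period."""
    period_us = 1_000_000 / PWM_FREQUENCY_HZ
    return 100.0 * pulse_us / period_us


if __name__ == "__main__":
    for msg in ("-90", "0", "45", "120"):
        angle = parse_angle(msg)
        pulse = angle_to_pulse_us(angle)
        print(f"{msg!r}: angle={angle}, pulse={pulse:.0f} us, "
              f"duty={pulse_to_duty_cycle(pulse):.2f}%")
```

The duty-cycle percentage is the form RPi.GPIO's `PWM.ChangeDutyCycle()` accepts, while pigpio's `set_servo_pulsewidth()` takes the pulse width in microseconds directly; the post doesn't say which library the Pi program uses.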

Getting the orientation mapping to work was challenging for me. It involved leveraging a graphics library (Three.js) to convert away from Euler angles (which are commonly used in graphics) and then doing some trigonometry. I'm probably doing it wrong.
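The post does this conversion with Three.js. As a hand-rolled alternative, here is a sketch in Python (in the page it would run in JavaScript inside a `deviceorientation` handler): build the rotation matrix from the W3C DeviceOrientation angles `alpha`, `beta`, `gamma` (intrinsic Z, then X', then Y'' order), rotate the rear camera's axis (device -Z) into Earth coordinates, and read yaw and pitch off that direction vector with `atan2` and `asin`. Working from the direction vector sidesteps jumps in the individual Euler angles: `gamma` is limited to [-90, 90), so with a phone held in landscape, tilting the view from slightly below to slightly above the horizon makes all three angles jump. The function names are hypothetical.

```python
import math


def camera_direction(alpha: float, beta: float, gamma: float) -> tuple:
    """Unit vector the rear camera faces, in Earth axes (x=east, y=north, z=up).

    alpha/beta/gamma are DeviceOrientation angles in degrees, applied as intrinsic
    rotations about Z, then X', then Y''. The rotation matrix is therefore
    R = Rz(alpha) @ Rx(beta) @ Ry(gamma), and the rear camera looks along the
    device's -Z axis, so the result is R @ (0, 0, -1): the negated third column of R.
    """
    a, b, g = (math.radians(v) for v in (alpha, beta, gamma))
    col3 = (
        math.cos(a) * math.sin(g) + math.sin(a) * math.sin(b) * math.cos(g),
        math.sin(a) * math.sin(g) - math.cos(a) * math.sin(b) * math.cos(g),
        math.cos(b) * math.cos(g),
    )
    return tuple(-c for c in col3)


def yaw_pitch(alpha: float, beta: float, gamma: float) -> tuple:
    """Compass-style yaw (clockwise from north) and pitch (above horizon) in degrees."""
    x, y, z = camera_direction(alpha, beta, gamma)
    yaw = math.degrees(math.atan2(x, y))  # turning right increases yaw
    pitch = math.degrees(math.asin(max(-1.0, min(1.0, z))))  # clamp guards rounding
    return yaw, pitch


if __name__ == "__main__":
    # Phone in landscape, looking east, first 10 degrees below and then 10 degrees
    # above the horizon: every Euler angle jumps, but yaw and pitch stay smooth.
    print(yaw_pitch(0, 0, -80))
    print(yaw_pitch(180, -180, 80))
```

The yaw this produces is an absolute heading, so for the pan servo it would still need to be taken relative to a starting heading and limited to ±90 degrees.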

Creating the Cardboard view

The Google Cardboard viewer requires a split-screen display, with each image aligned to the center of the lens for each eye. To accomplish this, I additionally use Three.js and its StereoEffect plugin.

The basic application in JavaScript is:

- Create a Three scene

- Create a Three renderer (WebGL)

- Wrap the renderer in the StereoEffect effect

- Create an HTML canvas element

- Create a Three texture from the canvas

- Create a 2D plane and a Three Mesh whose material maps the canvas-backed texture onto it

- On each animation frame:

  - Get the current video frame from the WebRTC-backed <video> tag, and paint it onto the canvas.

  - Indicate to the Three texture that the canvas data has changed.

  - Render the scene through the StereoEffect.

This leads to a split-screen render where there is a 2D plane which appears to be a video playing in front of each eye.
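Conceptually, the stereo step draws the same scene twice per frame, into the left and right halves of the canvas, from two cameras shifted sideways by half the eye separation each. The Python sketch below computes only that geometry, plus one way to size the video plane so it fills a chosen vertical field of view; it is not Three.js code. The names, the 0.064 eye separation, and sizing the plane from the camera's vertical field of view are assumptions, and Three.js's `StereoCamera` additionally skews each eye's projection, which this omits.

```python
import math
from dataclasses import dataclass


@dataclass
class EyeView:
    x: int                # left edge of this eye's viewport, in pixels
    width: int            # viewport width in pixels
    height: int           # viewport height in pixels
    camera_offset: float  # sideways camera shift, in scene units


def stereo_views(canvas_width: int, canvas_height: int, eye_separation: float = 0.064):
    """Split the canvas side by side: left eye gets the left half, right eye the right."""
    half = canvas_width // 2
    left = EyeView(0, half, canvas_height, -eye_separation / 2)
    right = EyeView(half, canvas_width - half, canvas_height, eye_separation / 2)
    return left, right


def plane_size(distance: float, vertical_fov_deg: float, video_width: int, video_height: int):
    """Width and height of a plane at `distance` from the camera that exactly fills
    the vertical field of view while keeping the video's aspect ratio."""
    height = 2.0 * distance * math.tan(math.radians(vertical_fov_deg) / 2.0)
    return height * video_width / video_height, height


if __name__ == "__main__":
    for eye in stereo_views(1920, 1080):
        print(eye)
    print(plane_size(distance=1.0, vertical_fov_deg=90.0, video_width=640, video_height=480))
```

Because both eyes see the same flat plane, the result looks like a screen floating in front of the viewer rather than true 3D; the small camera offset mainly places that screen at a comfortable apparent depth.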

Final Notes

There are several areas of the whole setup that need improvement.

Latency

There is a slight delay (under a second) between moving the Cardboard viewer and the perceived movement of the image in the video. This is likely a combination of several factors, such as:

- Pure network latency. I'm in NJ, the Pi is in NJ, and when using the Internet (as opposed to LAN-only) I communicate with it via Montreal. That's a one-way journey of 720 miles.

- On the other hand, even on the LAN the latency isn't much better.

- Latency in the Python program on the Pi, from actually processing messages and moving the servo.

- Latency in the video streaming software (UV4L) pushing out new frames, and in the browser receiving and displaying them.
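A back-of-the-envelope check on the network factor: converting the 720-mile one-way path into a propagation delay, assuming light in optical fiber covers roughly 200,000 km/s (about two thirds of its speed in a vacuum). This is an estimate under that assumption only; it ignores routing detours, queuing, and processing at each hop.

```python
MILES_TO_KM = 1.609344
# Assumption: light in optical fiber travels at roughly 2/3 of c, i.e. ~200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000


def propagation_delay_ms(miles: float) -> float:
    """Minimum time for a signal to cover `miles` of fiber, in milliseconds."""
    km = miles * MILES_TO_KM
    return km / FIBER_SPEED_KM_PER_S * 1000


if __name__ == "__main__":
    one_way = propagation_delay_ms(720)
    print(f"one-way: {one_way:.1f} ms, round trip: {2 * one_way:.1f} ms")
```

That comes to roughly 6 ms one way and 12 ms round trip. Even several times that for indirect routing would stay far below a sub-second delay, which fits the observation that the LAN isn't much better: most of the lag likely comes from the other factors.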

I will investigate this in a future post. Latency is not a concern in this prototype but it'd be nice to see where it's all coming from.

The actual tilt-and-pan rig

It's pretty makeshift at the moment. A co-worker pointed me to a properly built tilt-and-pan rig for less than $9 on Deal Extreme (link). I put in an order and will wait to see how it works.

It's not a huge priority, but it will probably be more stable. Plus, the rig comes with two servos, so not bad.